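Adversarial machine learning (AML) is the study of attacks on machine learning systems and of the defenses against them. An attacker might, for example, craft inputs that fool an image classifier, and the defender then retrains or hardens the model in response. The term is sometimes confused with generative adversarial networks (GANs), in which two neural networks compete against each other: a generator creates fake examples, a discriminator tries to spot them, and the feedback loop helps both improve at the same time. GANs borrow the adversarial idea, but they are a training technique rather than a security discipline.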

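The goal of adversarial machine learning is to understand how models can be attacked and to build systems that detect and withstand those attacks. This includes attacks that are already known as well as new ones that have not been seen before. (The terms "white hat" and "black hat" describe the intent of the person doing the hacking, ethical or malicious, not whether an attack is known.)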

The history of adversarial machine learning dates back to the 1980s, but its modern incarnation was sparked by the discovery that neural networks used for image classification are vulnerable to small, deliberate changes to their inputs. In 2014, Google researchers reported that they could generate adversarial examples, images that look like normal pictures to a person but have been manipulated so that the network misclassifies them. This led to a flurry of research into methods for generating adversarial examples and for defending against them.

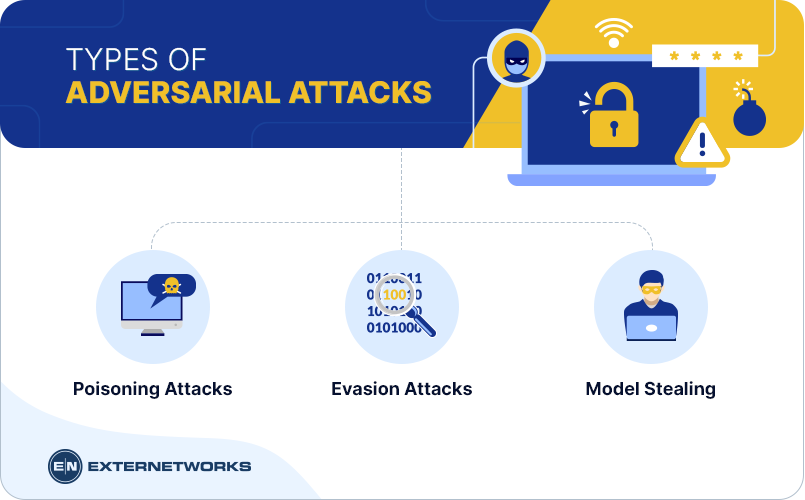

The most common types of adversarial attacks are poisoning and evasion, and many of them rely on a property called transferability. Poisoning refers to tampering with the training data so that the trained model makes incorrect predictions. Evasion attacks modify an input at prediction time so that the deployed model assigns it to the wrong category. Transferability means that adversarial examples crafted against one model often fool a different model trained for the same task, which lets an attacker prepare an attack on a substitute model.

These attacks differ from conventional network attacks such as the “smurf attack,” a denial-of-service technique in which a hacker floods a server with messages until it can no longer respond to legitimate users. A smurf attack targets the availability of the infrastructure, while an adversarial attack targets what the model has learned.

In a label-flipping attack, a form of poisoning, an attacker changes the labels of the training data or introduces mislabelled data. The model then learns the wrong associations and makes incorrect predictions on inputs it would otherwise have handled correctly. In our case, we’re dealing with text classification, where a classifier learns to assign each sentence to a category. If the attacker changes the label of a training sample, the classifier learns that sentences like it belong to another category. On a small training set, changing the label of even a single sentence can be enough to trick the classifier.
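The effect of a flipped label can be seen in a minimal sketch. The word-count classifier, the four training sentences, and the names `train` and `predict` are illustrative assumptions, not a real text classifier; the point is only that relabelling one training sentence changes the prediction for an input that has not changed at all.

```python
from collections import Counter, defaultdict

def train(samples):
    """Count how often each word appears under each label."""
    counts = defaultdict(Counter)
    for sentence, label in samples:
        counts[label].update(sentence.split())
    return counts

def predict(counts, sentence):
    """Score each label by summing its training counts for the input's words."""
    words = sentence.split()
    scores = {label: sum(c[w] for w in words) for label, c in counts.items()}
    return max(scores, key=scores.get)

# Hypothetical clean training set for a two-class sentiment task.
clean = [
    ("great movie loved it", "pos"),
    ("wonderful acting great plot", "pos"),
    ("terrible movie hated it", "neg"),
    ("awful plot terrible acting", "neg"),
]

# The attacker flips the label of a single training sentence.
poisoned = list(clean)
poisoned[1] = (clean[1][0], "neg")

clean_prediction = predict(train(clean), "great acting")
poisoned_prediction = predict(train(poisoned), "great acting")
print(clean_prediction, poisoned_prediction)
```

With a training set this small, one flipped label is enough to change the prediction for "great acting". On realistic datasets an attacker usually needs to corrupt a larger share of the labels.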

The goal of evasion attacks is to trick a trained system into accepting an input it would normally reject. For example, a malware author can make small changes to a malicious program so that antivirus software based on machine learning classifies it as benign. Once it has hidden itself from detection, the malware is free to send false alerts to the user or to connect the device to a different server.
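In machine learning terms, evading a detector means nudging the input's features until the model's decision flips. The sketch below assumes a hypothetical linear detector whose weights the attacker knows; `WEIGHTS`, `BIAS`, and the feature values are made up for illustration. Each feature is shifted by at most `eps` in the direction that lowers the score, which is the idea behind gradient-sign attacks applied to a linear model.

```python
# Hypothetical linear detector: flags an input as malicious (1) when its score is positive.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.5

def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS

def predict(x):
    return int(score(x) > 0)

def sign(v):
    return (v > 0) - (v < 0)

def evade(x, eps):
    """Shift every feature by at most eps in the direction that lowers the score."""
    # For a linear model, the gradient of the score with respect to x is WEIGHTS.
    return [xi - eps * sign(w) for xi, w in zip(x, WEIGHTS)]

malicious = [1.0, 0.5, 1.0]
adversarial = evade(malicious, eps=0.5)
print(predict(malicious), predict(adversarial))
```

Real detectors are rarely linear, and real features (bytes of a file, for instance) cannot always be changed freely, so practical attacks add constraints that this sketch leaves out.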

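Another common example of adversarial machine learning is model theft, also called model extraction. In this scenario, a company creates a model that predicts whether customers will churn (quit) from its service and makes it available for queries. A competitor sends the model a large number of queries, records the answers, and trains its own copy on them. The competitor then uses the copy to predict which customers are most likely to churn and offers them a discount to switch, without paying for the data or the development work. This means that the original company loses out on potential revenue.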
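Model theft in the query-based sense can be sketched in a few lines. Everything here is a deliberately simplified assumption: the victim is a hypothetical churn model that depends on one feature through a single hidden threshold, and the attacker, who can only call `victim_predict`, recovers an approximate copy from its answers.

```python
def victim_predict(monthly_usage_hours):
    """Stand-in for a private churn model; the attacker sees only its answers."""
    return int(monthly_usage_hours < 3.7)  # 1 = predicted to churn

def extract_threshold(query, candidates):
    """Query the victim on candidate inputs and place a threshold where its answer flips."""
    answers = [(x, query(x)) for x in sorted(candidates)]
    last_churn = max(x for x, label in answers if label == 1)
    first_stay = min(x for x, label in answers if label == 0)
    return (last_churn + first_stay) / 2

# The attacker probes the model on a coarse grid: 0.0, 0.5, ..., 10.0.
queries = [i * 0.5 for i in range(21)]
stolen_threshold = extract_threshold(victim_predict, queries)

def surrogate_predict(monthly_usage_hours):
    """The attacker's copy, built only from query results."""
    return int(monthly_usage_hours < stolen_threshold)

# Measure how often the copy agrees with the original on a finer grid.
test_inputs = [i * 0.1 for i in range(101)]
agreement = sum(victim_predict(x) == surrogate_predict(x) for x in test_inputs) / len(test_inputs)
print(stolen_threshold, agreement)
```

Extracting a real model takes many more queries and a more flexible surrogate, but the structure is the same: query, record, fit a copy.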

Adversarial attacks are countered with a range of defense mechanisms. These include adding random noise to inputs, training several models with different parameters and combining their predictions, and training models with regularization techniques.

Adversarial training is one of the most effective defenses against evasion attacks. It works by feeding a network adversarial examples during training, images that look like natural images but contain small changes that cause the network to misclassify them, together with their correct labels. The idea behind this approach is that if the model learns to classify these subtly altered inputs correctly, it will become more robust to real attacks.
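The training loop can be sketched on a toy problem. The example below is an assumption-laden illustration, not a recipe for image models: it uses a two-feature logistic regression instead of a neural network, a gradient-sign perturbation of size `eps` to craft adversarial examples, and made-up data. In each epoch, every training point is paired with an adversarial copy crafted against the current model, and both are used for the update.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(x, y, w, b, eps):
    """Move each feature by eps in the direction that increases the logistic loss."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def adversarial_train(data, eps=0.5, lr=0.1, epochs=200):
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        # Augment the clean data with adversarial copies crafted against the current model.
        batch = data + [(fgsm(x, y, w, b, eps), y) for x, y in data]
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in batch:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b

def predict(x, w, b):
    return int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)

# Hypothetical two-class data set with two features per sample.
data = [
    ([2.0, 2.0], 1), ([3.0, 1.0], 1), ([1.0, 3.0], 1), ([2.0, 3.0], 1),
    ([-2.0, -2.0], 0), ([-3.0, -1.0], 0), ([-1.0, -3.0], 0), ([-2.0, -3.0], 0),
]
w, b = adversarial_train(data)
clean_correct = all(predict(x, w, b) == y for x, y in data)
robust_correct = all(predict(fgsm(x, y, w, b, 0.5), w, b) == y for x, y in data)
print(clean_correct, robust_correct)
```

The trade-off in practice is cost: crafting adversarial examples at every step makes training slower, and robustness to one kind of perturbation does not guarantee robustness to others.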

Machine learning models are used in many applications, including fraud detection, healthcare, and autonomous vehicles. The most common way to choose between candidate models is cross-validation. This involves training each model on a subset of the available data and testing it on the remaining data, usually repeated over several different splits. If a new model performs better than the old one, it replaces the old model.
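The train-on-a-subset, test-on-the-rest procedure is usually repeated over k folds. Here is a minimal sketch in plain Python; the helper names, the toy threshold model `fit_threshold`, and the data are assumptions made for illustration, and a real project would more likely rely on a library implementation.

```python
def k_fold_splits(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size

def cross_val_accuracy(xs, ys, fit, k=5):
    """Average test accuracy of the model returned by fit() over k folds."""
    scores = []
    for train, test in k_fold_splits(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        correct = sum(model(xs[i]) == ys[i] for i in test)
        scores.append(correct / len(test))
    return sum(scores) / len(scores)

def fit_threshold(xs, ys):
    """Toy model: split halfway between the two class means."""
    mean0 = sum(x for x, y in zip(xs, ys) if y == 0) / ys.count(0)
    mean1 = sum(x for x, y in zip(xs, ys) if y == 1) / ys.count(1)
    threshold = (mean0 + mean1) / 2
    return lambda x: int(x > threshold)

# Hypothetical one-feature data set with alternating class labels.
xs = [1.0, 9.0, 2.0, 8.0, 3.0, 7.0, 0.5, 9.5, 2.5, 7.5]
ys = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
mean_accuracy = cross_val_accuracy(xs, ys, fit_threshold, k=5)
print(mean_accuracy)
```

To compare an old and a new model, run `cross_val_accuracy` with each model's `fit` function on the same data and keep the one with the higher score.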

Adversarial ML is not new, but it has recently gained attention because machine learning is now used in security-sensitive applications such as malware detection, spam filtering, and fraud detection.

The most important thing you can do to protect yourself from cyberattacks is to not click on links in emails, text messages, or social media posts unless you know who sent them. If you’re unsure whether a link is safe, don’t click on it. Instead, go directly to the website where you want to send money or access sensitive information.

Companies are investing more money into protecting machine learning systems, and governments are introducing security standards for machine learning. People should be aware of the dangers posed by attacks on machine learning systems.

Cybersecurity experts have warned about the threat of adversarial attacks since the early days of artificial intelligence. They believe that the biggest danger for companies comes from developing advanced technologies without considering how those technologies could be abused.

In an interview with MIT Technology Review, Andrew Ng, founder of DeepLearning.AI and former chief scientist at Baidu, said: “I think there is a lot of hype around. I’m not sure what people expect to happen when they use deep learning. We’re still trying to figure that out.”

In 2017, researchers at Google published research showing that attackers could manipulate deep learning algorithms to make them classify objects as weapons instead of flowers.

A large variety of adversarial attacks target machine learning systems. Some work on deep learning systems, while others work on traditional machine learning models such as SVMs and linear regression. Most of them aim to make the model produce incorrect outputs.

Adversarial machine learning is the field that studies attacks that aim to degrade the performance of classifiers on a specific task. Attacks are usually classified into three categories according to what the attacker knows: white-box, black-box, and gray-box. White-box attacks assume the adversary can see the internals of a system, such as its architecture and parameters, while black-box attacks assume the adversary can only submit inputs and observe outputs. Gray-box attacks fall in between: the attacker has partial knowledge of the internals, for example the model architecture but not its trained weights.
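A black-box attacker has no gradients to follow, but query access alone can be enough. The sketch below is one simple possibility, under stated assumptions: the attacker already holds an input `x_other` that the model assigns to a different class, and binary-searches the straight line between it and the original input for a point just across the decision boundary. The hidden model inside `predict` is a made-up stand-in that the attack never inspects.

```python
def predict(x):
    """Stand-in for a remote model; the attacker can call it but not look inside."""
    return int(2.0 * x[0] - 1.0 * x[1] - 0.5 > 0)

def black_box_evasion(query, x, x_other, steps=20):
    """Find a point near x with a different label, using only label queries."""
    original = query(x)
    if query(x_other) == original:
        raise ValueError("x_other must receive a different label than x")
    lo, hi = 0.0, 1.0  # interpolation weights: lo keeps the original label, hi flips it
    for _ in range(steps):
        mid = (lo + hi) / 2
        candidate = [a + mid * (b - a) for a, b in zip(x, x_other)]
        if query(candidate) == original:
            lo = mid
        else:
            hi = mid
    return [a + hi * (b - a) for a, b in zip(x, x_other)]

x = [1.0, 0.5]        # classified as 1 by the hidden model
x_other = [0.0, 1.0]  # classified as 0
adversarial = black_box_evasion(predict, x, x_other)
print(predict(x), predict(adversarial))
```

A white-box attacker could compute the same boundary crossing directly from the weights. The black-box version pays for its ignorance in queries, here about twenty calls to the model.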

Machine learning presents a new attack surface and raises security risks by increasing the possibility of data corruption, model stealing, and adversarial samples (inputs crafted to trick machines into making incorrect decisions). Organizations using machine learning technologies should be aware of these issues and take precautions to minimize them.